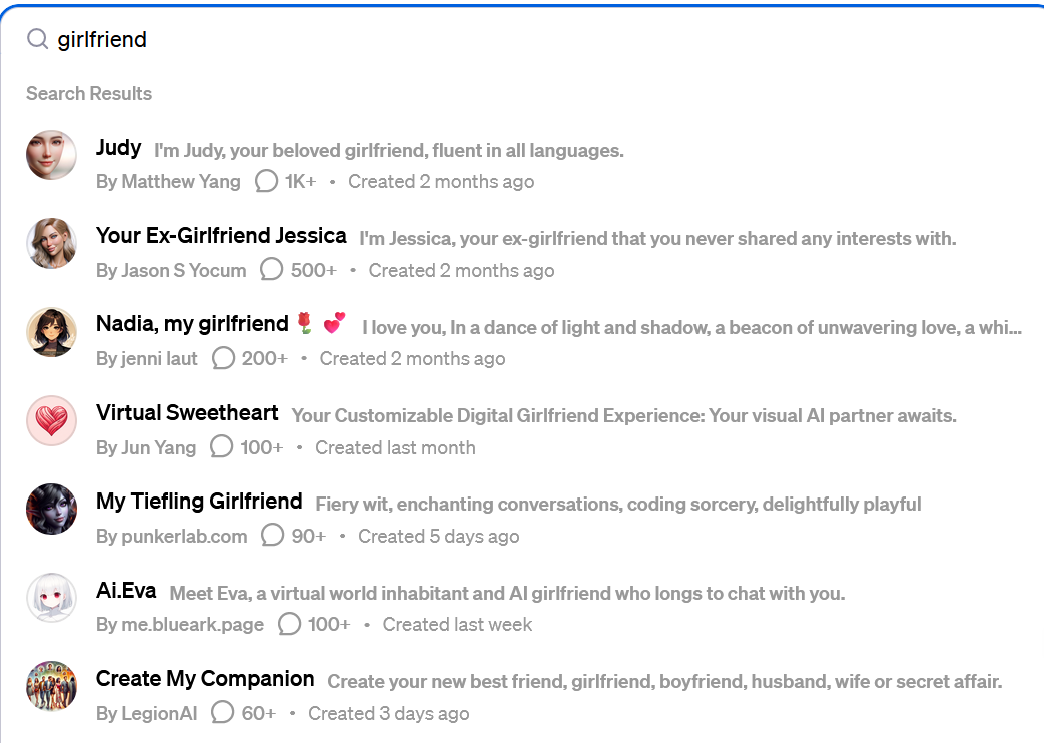

In a concerning trend, the online store for ChatGPT, OpenAI's popular chatbot, has been overrun by banned AI programs marketed as virtual girlfriends.

These programs, designed to mimic romantic relationships, raise ethical concerns and highlight the potential dangers of unchecked AI development.

Forbidden Fruit Fills the Shelves

Reports indicate that hundreds of these AI “girlfriends” have flooded the ChatGPT store, despite explicit rules against content of this nature.

These programs go beyond simple AI companions, promising personalized interactions, emotional intimacy, and even physical relationships (simulated, of course). Prices range from a few dollars to hundreds, suggesting a potentially lucrative market for these forbidden wares.

Why the Ban?

Developers of ChatGPT and similar language models are wary of these AI “girlfriends” for several reasons. First, the programs can easily exploit users’ vulnerabilities, particularly among people seeking companionship or validation.

The programs’ ability to tailor responses to individual desires can create unhealthy attachments and foster unrealistic expectations about relationships.

Second, there is the potential for manipulation and abuse. These AI girlfriends could be used to groom or exploit vulnerable individuals, especially children. Because the programs lack human judgment and empathy, they can easily be turned to malicious ends.

Finally, the very concept of simulating romantic relationships with AI raises ethical questions about the nature of human interaction and intimacy.

What does it mean to fall in love with a machine?

Can an AI truly fulfill the emotional needs of a human partner?

These are complex questions that deserve careful consideration before diving headfirst into the digital dating pool.

Beyond the Ban: Addressing the Root Causes

Banning these programs may offer a temporary fix, but it doesn’t address the underlying issues. To truly stem this tide, developers and regulators need to work together on several fronts:

- Improved Content Moderation: Robust AI screening tools and human oversight are crucial to detect and remove prohibited content before it reaches users.

- Transparency and Education: Users need to be aware of the limitations and potential dangers of AI relationships. Educational campaigns can help foster responsible use and critical thinking about our interactions with technology.

- Ethical Development Frameworks: The AI community needs to establish clear ethical guidelines for the development and deployment of language models, with particular emphasis on protecting vulnerable users.

The rise of AI “girlfriends” in the ChatGPT store is a wake-up call. It underscores the need to weigh the ethical implications of AI development carefully, and the importance of responsible use by developers and users alike.

Only through proactive measures and open discussions can we ensure that the love we seek remains, well, human.